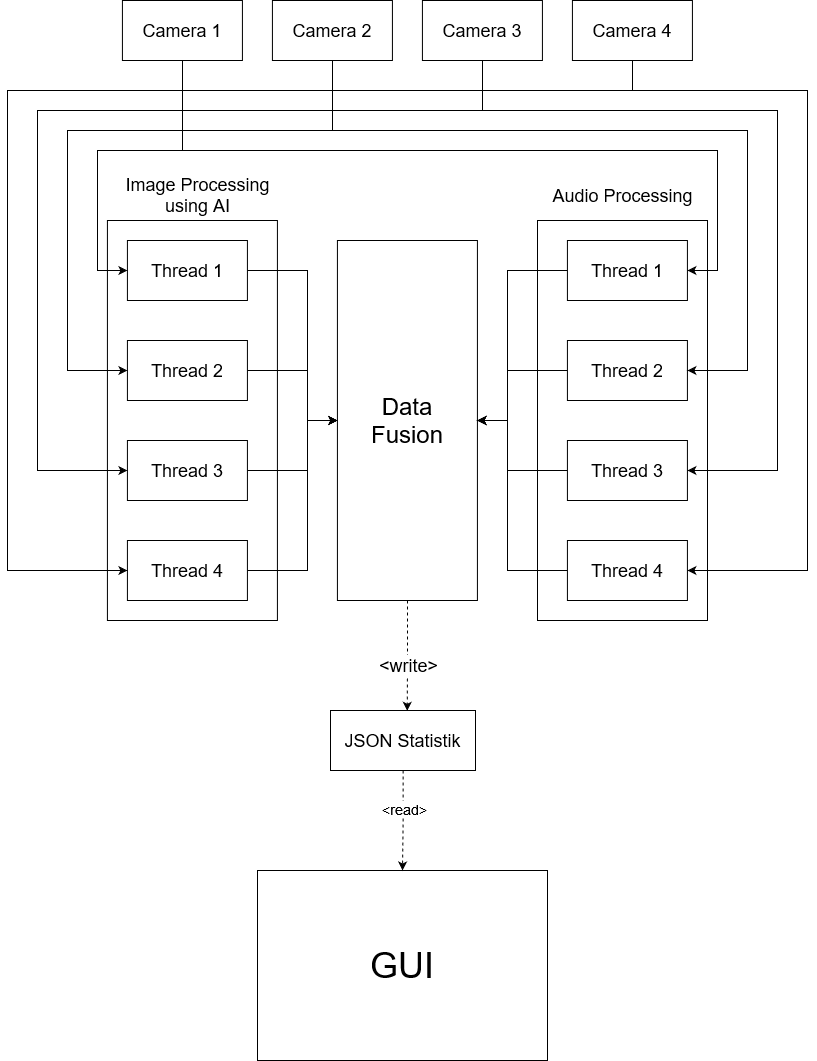

The videos are recorded in advance by the cameras. The audio track of each video is then extracted to a WAV file in a fixed format (16-bit, 1 channel). The sensor fusion software reads all video and audio files and analyses them.
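As an illustration of the audio input format, the following minimal sketch reads such a file with Python's standard `wave` module and rejects anything that is not 16-bit, 1 channel. The function name `read_mono_16bit_wav` is hypothetical and the project's own reader may work differently.

```python
import struct
import wave


def read_mono_16bit_wav(path):
    """Return (sample_rate, samples) for a 16-bit, 1-channel WAV file.

    Hypothetical helper for illustration; raises ValueError for other formats.
    """
    with wave.open(path, "rb") as wav:
        if wav.getnchannels() != 1:
            raise ValueError("expected 1 channel, got %d" % wav.getnchannels())
        if wav.getsampwidth() != 2:
            raise ValueError("expected 16-bit samples, got %d-bit" % (8 * wav.getsampwidth()))
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())
    # WAV stores 16-bit PCM as little-endian signed integers.
    samples = list(struct.unpack("<%dh" % (len(raw) // 2), raw))
    return rate, samples
```

Checking the format up front means a wrongly extracted file fails immediately instead of producing misleading results later in the audio analysis.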

An AI model recognises objects in the video files. OpenCV is used for this step as well as for the image processing algorithms. The initial object recognition is followed by line recognition and by team recognition of the players. All of these operations take place in the image processing thread.

With the help of an FFT library and a band-pass filter, the audio processing threads search for the referee's whistle in the audio files extracted from the videos. In combination with the videos, the whistles help to recognise goals, free kicks, fouls, etc. more reliably. They are essential for recognising the start of the match, half-time and the end of the match.
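The whistle search can be sketched roughly as follows. This is not the project's implementation: a small pure-Python radix-2 FFT stands in for the FFT library, the band-pass step is approximated by summing only the FFT bins inside a frequency band, and the band limits (2000 to 4500 Hz), frame size and threshold are assumed values chosen for illustration.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def band_energy_ratio(frame, rate, low_hz, high_hz):
    """Fraction of the frame's spectral energy that lies in [low_hz, high_hz]."""
    n = len(frame)
    spectrum = fft(frame)
    # Skip the DC bin and keep only the positive-frequency bins.
    power = [abs(c) ** 2 for c in spectrum[1:n // 2]]
    total = sum(power)
    if total == 0:
        return 0.0
    in_band = sum(p for i, p in enumerate(power, start=1)
                  if low_hz <= i * rate / n <= high_hz)
    return in_band / total


def detect_whistle(samples, rate, low_hz=2000.0, high_hz=4500.0,
                   frame_size=1024, threshold=0.7):
    """Return start times (seconds) of frames dominated by the assumed whistle band."""
    hits = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        if band_energy_ratio(frame, rate, low_hz, high_hz) >= threshold:
            hits.append(start / rate)
    return hits
```

Using an energy ratio rather than absolute energy keeps the decision independent of recording volume, although real stadium noise would likely require tuning of the assumed threshold and band.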

All data processing threads deliver their results to the data fusion thread. There, the data is interpreted, corresponding events are derived from it and final match statistics are generated in JSON format.
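The hand-over from the processing threads to the data fusion thread could look like the sketch below. Everything specific in it is an assumption made for illustration: the queue-based hand-over, the record fields (`source`, `t`, `kind`), the event names, and the simple rule that a `ball_in_goal` detection counts as a goal only if a whistle occurs within `max_gap` seconds.

```python
import json
import queue
import threading


def run_worker(source, detections, out_queue):
    """Stand-in for a processing thread: push its results, then a sentinel."""
    for timestamp, kind in detections:
        out_queue.put({"source": source, "t": timestamp, "kind": kind})
    out_queue.put(None)  # signals that this worker is finished


def fuse(out_queue, n_workers, max_gap=1.0):
    """Collect all results, then derive events with a hypothetical rule."""
    items = []
    finished = 0
    while finished < n_workers:
        item = out_queue.get()
        if item is None:
            finished += 1
        else:
            items.append(item)
    items.sort(key=lambda d: d["t"])
    whistles = [d["t"] for d in items if d["kind"] == "whistle"]
    events = [{"event": "goal", "t": d["t"]}
              for d in items
              if d["kind"] == "ball_in_goal"
              and any(abs(d["t"] - w) <= max_gap for w in whistles)]
    return {"events": events, "goals": len(events)}


def run_pipeline(video_detections, audio_detections):
    """Run two workers and one fusion thread; return the statistics as JSON."""
    q = queue.Queue()
    workers = [
        threading.Thread(target=run_worker, args=("video", video_detections, q)),
        threading.Thread(target=run_worker, args=("audio", audio_detections, q)),
    ]
    result = {}
    fusion = threading.Thread(target=lambda: result.update(fuse(q, len(workers))))
    fusion.start()
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    fusion.join()
    return json.dumps(result, sort_keys=True)
```

For example, `run_pipeline([(12.0, "ball_in_goal"), (40.0, "ball_in_goal")], [(12.4, "whistle")])` yields one goal at 12.0 s under this rule, because only the first detection has a whistle nearby.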

The GUI for visualising the results is a separate tool, independent of the sensor fusion software. It displays the statistics generated in JSON format and the generated videos with the detected objects.
